Denise Smith: ‘It took just seconds to generate a sleazy deepfake porn image of me online’

Sleazes are paying $30 to deepfake websites to strip women of clothes... and victims’ ‘naked’ pics are being shared on porn sites

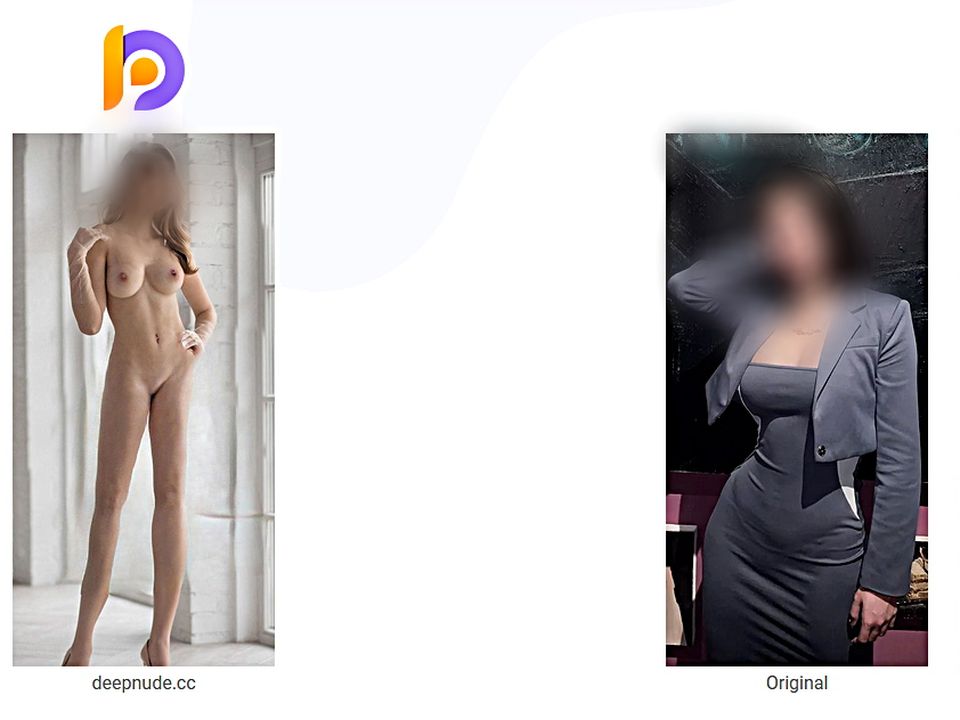

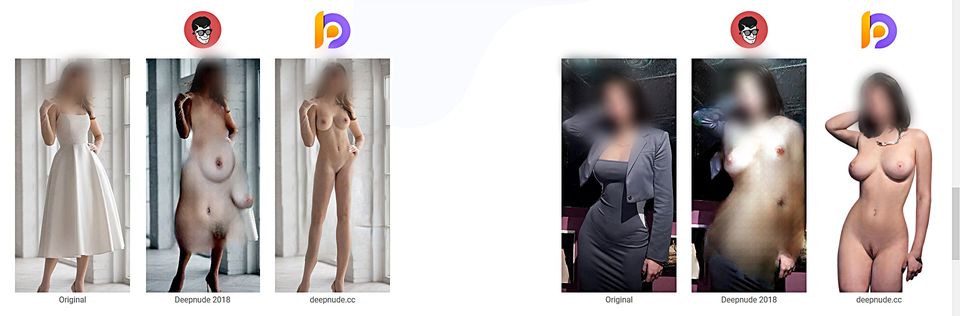

Arms folded and eyes smiling, I look completely at ease commanding a boardroom, but there’s one major issue with the photo that’s flashing up on the computer screen before me — I am completely naked.

In less than 15 seconds I have been digitally undressed by an easily accessible AI tool and I am horrified at the computer-generated clone staring back at me.

Welcome to the seedy underworld of deepfake porn: technology that uses ever-improving artificial intelligence to generate entirely realistic nude images of women and insert their likeness into hardcore pornographic videos.

Today, doctored nudes and sexually explicit videos of ordinary Irish women are infiltrating the internet at an alarming rate and can be created for as little as €30 thanks to ground-breaking software.

Deepfakes were once targeted solely at A-listers including Taylor Swift and Scarlett Johansson, and Irish presenter Vogue Williams recently spoke of her disgust at being digitally inserted into disturbing pornographic content.

And now the deepfake web is targeting ordinary women and it could be you, or me.

Our reporter Denise uploaded this image

Swiping pictures from Irish women’s Instagram accounts and uploading them through advanced apps online, twisted perverts intent on getting their sick kicks use lifelike pictures and videos for sexual gratification or to manipulate, control and destroy their victims.

Horrifyingly, many women are completely oblivious that their computer-generated nudes are now being traded in underground forums and appearing on porn sites.

A quick Google search leads me to dozens of sleazy websites that boast the software that can digitally undress women in an instant.

One depraved site that profits from the degradation of women even offers ‘customised fakes made by top-rated artists.’

Charging upwards of €40 for a picture, the website says: “Have your photos made fake nude, covered in fake c*m, or edited in any other erotic way you desire.”

Screenshot from the 'Fake Nude' website


Repulsed, I keep scrolling until I find a website that offers a “nudifier app.”

Detailing its updated service, the website says: “See any girl nude with the click of a button. The most powerful image deepfake AI created.”

There are three payment rates, from the basic package at $29.95 up to the deluxe package at $99, and the website promises real results including: “Better breast size prediction based on shadows and curve of clothing.”

I opt for the basic tier and grimace as I upload a professional photo of myself standing in a boardroom wearing a simple blazer dress.

Punching in the last number of my credit card and pressing process, I fork out over €30 and watch in horror as flesh-coloured skin bleeds through my blue dress.

Within seconds, the soft curve of breasts appears, followed by smooth, dimple-free limbs until I am completely naked. It is me, but it is not me.

Hauntingly life-like, my body has been super-enhanced — preened, pumped and processed to satiate the male gaze. To the untrained eye, save for glitches around my folded arms, nothing would be amiss.

A dodgy website and €30 later, I am now enmeshed in the non-consensual deepfake machine: technology that is now being weaponised against women and is ruining lives.

In the 15 seconds it took to strip my body bare, I calculate how quickly one image could be shared across the internet — a simple action that has the potential to eviscerate someone’s entire life.

And for that reason alone, I refuse to disclose the names of these platforms.

Deep fake site produced this image of our reporter Denise Smith

But that will not deter perpetrators from targeting real women.

Speaking last year, Dublin celebrity Vogue Williams opened up about her face being used in ‘deep fake’ pornography.

“I saw my face plastered on all these porn images and I was like: ‘Jesus, I don’t remember that’,” she joked.

“About three weeks ago, it came up again and they’ve updated the picture and I mean, if I thought I was having a good time before, I’m having a really good time in these ones.”

“I’m adventurous but not that adventurous,” she added.

Despite trying to laugh at the situation, Vogue explained how she struggled to get the images taken down and said she didn’t want her children to see them.

“You know what the weirdest thing is? You can’t get them down, like they’re just up, anyone is allowed to put them up, and they are starting to look more realistic.”

“The most recent ones look so realistic and I can kind of laugh about it but at the same time I hope they come down at some stage.

“I don’t want my kids to see them,” she said.

A 2019 study by Deeptrace Labs found that 96 per cent of all deepfake videos were pornographic and non-consensual. Alarmingly, the top four deepfake websites clocked 134 million views on such videos.

Tech expert Dan Purcell, who has become an ally to women everywhere after shutting down an online server containing hundreds of thousands of revenge porn pictures of Irish women last year, said the technology behind deepfake porn is ‘only in its infancy’.

The CEO of the anti-piracy and privacy protection service Ceartas is warning that the depraved act of deep faking could now be a gateway to real-life crime against women:

“It is so realistic looking that it may as well be real,” he said.

Offering a free service for victims who desperately want their images scrubbed from the internet, the tech genius said: “We don’t sugar coat anything, it’s 99.9 per cent men, men are the perpetrators and it is at scale.

“They are turning these girls into currency. You have these people that are good at Photoshop and they will go to these deepnude, deepfake websites and put a girl in at the request of someone from Reddit or Telegram and clean it up and make it look very real.

“The men are anywhere from 16 to 30 and some of the conversations make me think what kind of breed they are.

“They are asking, ‘Are there any girls from Donegal or Derry or my town?’ I think it is voyeurism and kink for them because they could walk by this girl and think ‘I’ve seen you naked’.


“These guys are in these groups and they do tributes. They will message different lads and say ‘do you want to have a w**k’ and they will simultaneously masturbate.

“What frightens me is that these guys are really starting to blur the lines. They are masturbating collectively and printing the pictures off and masturbating on them. It’s not outside the realm of possibility that a sexual assault will happen.

“These guys also have no accountability and there is so much anonymity online and the mindset is ‘what else can I get away with?’”

Lobbying social media giants to create a framework of accountability, he urged: “What I would suggest for Twitter, Instagram and Facebook is that when you report an image their systems should automatically remove access instantaneously until a decision is made; guilty until proven innocent. That would eliminate so many problems.”